As of mid-August 2021, various Facebook accounts, pages, and groups are still hosting content promoting the cluster of far-right conspiracy theories known as QAnon. This flies in the face of Facebook's Oct. 6, 2020, announcement stating that the company would remove content "representing QAnon," even if those posts or accounts "contained no violent content."

It's true that various QAnon keywords and hashtags such as "#WWG1WGA" ("Where We Go One We Go All") have been disabled in Facebook's search tool. Instead of seeing search results when looking for these terms, users are shown a link to Facebook's Community Standards.

However, while no results show up in these searches on Facebook, we found that QAnon content is still hosted by the platform. Users need to do little more than find one post to begin falling down a rabbit hole of deception and lies. Basically, once a single QAnon post is found, it's dangerously simple to follow the trail of likes, comments, and accounts, without the need to do any searching whatsoever.

The idea behind QAnon, as The New York Times described it, is about false allegations "that the world is run by a cabal of Satan-worshiping pedophiles." With this falsehood come a number of hashtags, such as #SaveTheChildren and #SaveOurChildren.

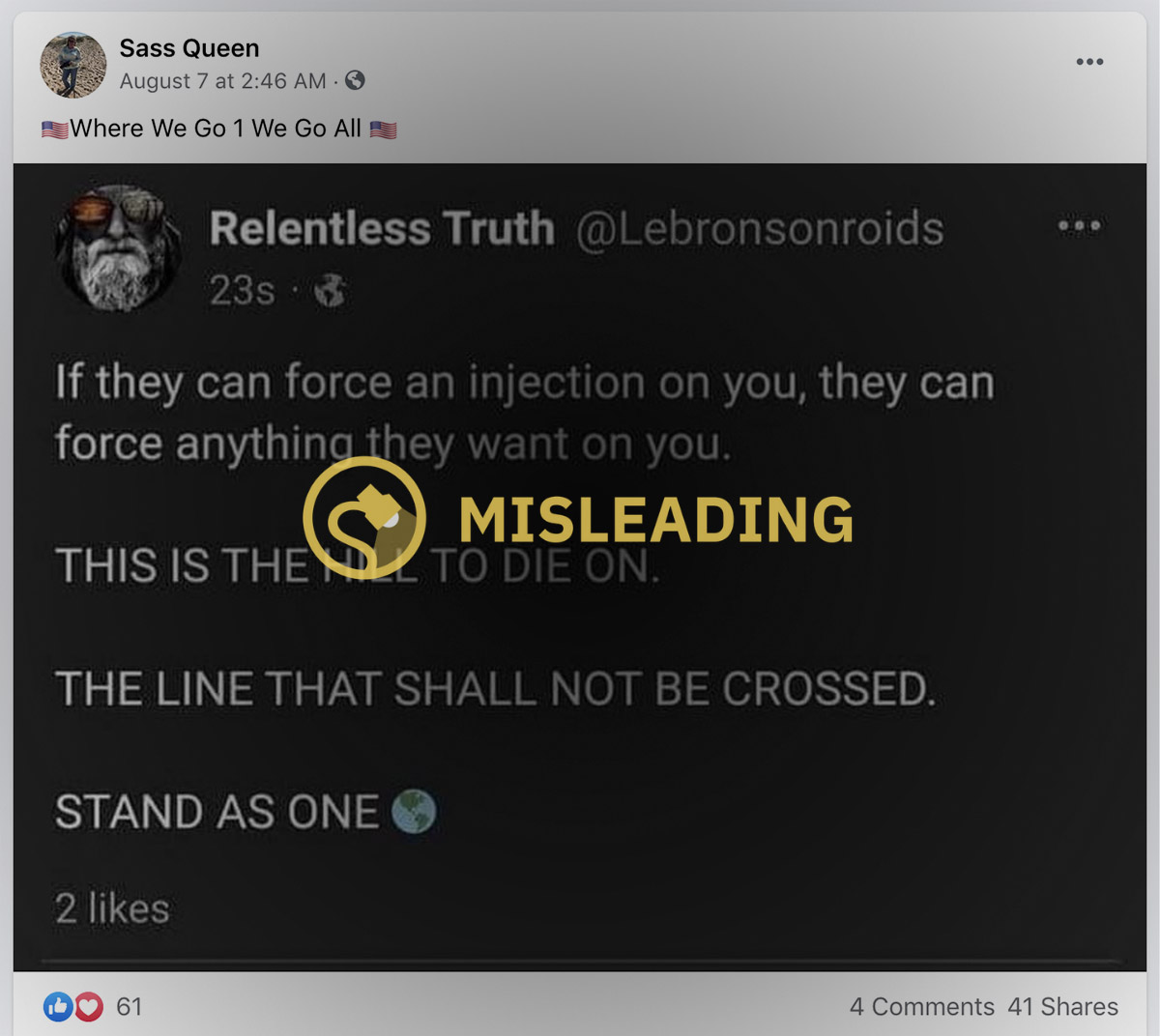

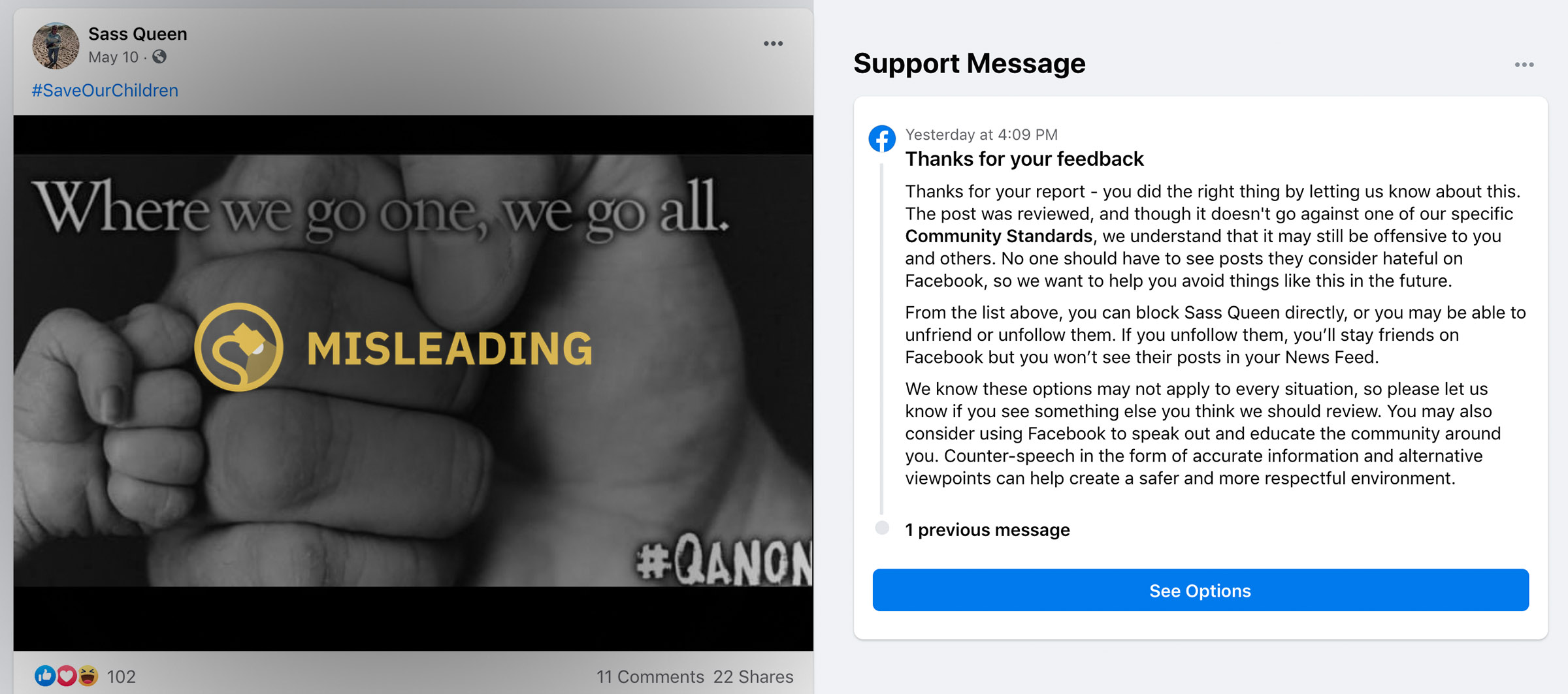

We easily located a number of Facebook posts that mentioned #SaveOurChildren with #WWG1WGA. In fact, we found multiple posts from a single user over the course of several months that all referenced QAnon.

Finding this account led us to a QAnon Facebook group named Digital Soldiers United, which had a giant cover image that read: "Save The Children." We also found a large number of posts within the public group that carried the hashtag "#SaveTheChildren."

In another Facebook group named Trump MAGA Supporters, members shared frog emojis to represent the QAnon mascot, Pepe the Frog. They also mixed vaccine misinformation with QAnon rhetoric and constantly mentioned "the great awakening," which is part of the QAnon conspiracy theory.

From these groups and accounts, a Facebook user can fall down a rabbit hole into the world of QAnon. Clicking on the accounts of people who liked or commented on the QAnon posts led us to more memes and links.

Further, the sampling of findings in this story perhaps only scratches the surface of the QAnon content that's hosted by Facebook. According to a DFRLab report from Jared Holt and Max Rizzuto, first published on May 26, 2021, many of the old phrases and keywords that were once associated with QAnon have changed and evolved.

Holt and Rizzuto wrote that a "neo-QAnon" now exists, described as "a cluster of loosely connected conspiracy theory-driven movements that advocate many of the same false claims without the hallmark linguistic stylings that defined QAnon communities during their years of growth."

It's unclear why Facebook and its "30,000 or 35,000 people" who are employed to review content are unable to find and take action on QAnon posts and groups like these. That figure, which Facebook CEO Mark Zuckerberg gave in a July 2021 interview with The Verge, is the number of people who review Facebook content to see if any action needs to be taken.

Beyond all of this, we manually reported a handful of these QAnon posts using Facebook's reporting tool, which is available to all users. In 100 percent of the responses that we received from Facebook's support team, the company claimed that the QAnon content "doesn't go against one of our specific Community Standards." In reality, all of the posts were very much against Facebook's policies.

Our past research has long shown Facebook's reporting tool to be extremely ineffective. For example, during our exclusive investigation of "The BL," we manually submitted reports to the company for hundreds of fake accounts. Facebook's support team initially claimed in its responses that around 95 percent of the accounts were not fake, even though they were. Company employees with higher-ranking job titles finally appeared to step in and remove all of the fake accounts weeks later.

Facebook announced the ban of "The BL" weeks after we filed our first report and notified the company by email. It was perhaps the largest foreign disinformation network takedown in Facebook history, involving 55 million followers and $9.5 million in Facebook ads. The news appeared to be mostly lost in the blur of the holiday season, as the ban was announced by Facebook on the Friday afternoon before Christmas in 2019.

It's unclear why Facebook and its "30,000 to 35,000" content review employees don't take a more proactive approach regarding finding and removing dangerous content, especially considering the gravity of recent national and world news and events. However, until that day comes, we will continue to report our findings to the company.

We emailed Facebook about the QAnon rhetoric mentioned in this story. After we reached out, the company appeared to remove much of the policy-breaking content.

A Facebook company spokesperson did not respond directly to our questions. Instead, they sent this statement: "We remove Pages, groups and Instagram accounts that represent QAnon when we identify them and disable the profiles who admin them. We recognize that this is an evolving movement and there are always going to be efforts to circumvent our enforcement. That’s why we work to identify new terms and partner with external experts to study its evolution so we can keep more of this content off of our platform."